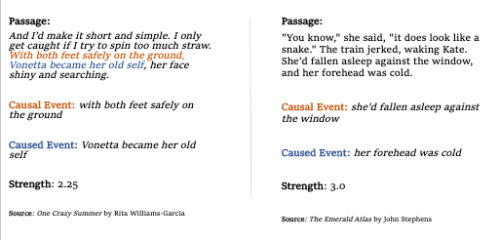

The paper “Causality Mining in Fiction” was published last month in the Proceedings of the Text2Story’22 Workshop, Stavanger (Norway). Using a new literary dataset and a SemEval dataset, Margaret, alongside co-authors Prof. Andrew Piper and undergraduate student Dane Malenfant, explores how NLP can be used to detect causal relationships within literary texts, particularly contemporary literary works. Given their findings, Margaret suggests that future research might study how causal relations are encoded differently across texts, text types, or textual communities.

Margaret et al.’s research project builds on prior work in narrative studies showing that causality is fundamental to understanding what we read. The researchers’ original interest was therefore to examine causality as a core part of how narrative texts are interpreted. Margaret explains that one of their main objectives was to test how NLP can be used to detect and understand causality both at a broad scale and across a single text. Prior research in the NLP community covers many disciplines and contexts but lacks approaches to causality in literature, and this project starts to fill that gap. Margaret and her co-authors establish baseline measurements of model performance so that they, and others, can continue to research causality in literary texts. They see the project as a first step for future research on causality.

As Margaret explains, the project’s contributions include computational models that detect causality between narrative events and models that detect the presence of a causal association within a sentence. She believes that bringing in computational methods and dissecting how and where they work well can help answer bigger questions, such as “What is causality?” and “What is its role in narrative?” Margaret explains: "If we understand causality, we might be able to theorize on why certain texts are convincing based on the sequence of causally related narrative events. For instance, why certain narratives around the cause of the Covid-19 pandemic are more persuasive than others." Margaret et al.’s project will thus enable other interested scholars to start testing their theories on how causality operates within and across texts.

We have shown that you can model and detect causality using computational methods. Other literary scholars can now attempt to prove and test their own hypotheses utilizing our proposed methods. Applying NLP to literary texts also contributes back to the NLP community, because it provides insight into where NLP models trained on non-literary text might fall down.

Research from the .txtlab

Margaret first got involved in this project through Prof. Piper, who had previously started a pilot project and collected data with the help of a team of undergraduate annotators responsible for the labelling stage of the research. Margaret and Dane were enrolled in a class on NLP tools and, after conversations with Prof. Piper, decided to work on this project to apply what they had learned in class. The project will now continue within the .txtlab and involve a larger research team, which will run another round of data collection and develop stricter annotation guidelines for what constitutes a narrative event. Margaret is excited to apply their causality detection models to different genres and time periods. She is particularly interested in how people use causality as a tool of persuasion, that is, to convince others of something communicated through different forms of media, such as news. In that sense, Margaret’s main interest is causality detection at scale.

Working with NLP in DH

NLP stands for Natural Language Processing, an application of artificial intelligence, particularly machine learning and deep learning models, that makes it possible to analyze large amounts of text. Margaret et al.’s project designed two different types of models for causality detection, including one that employs deep learning techniques. For Margaret, NLP is an ideal field for comparing different genres, media, and texts. It comes, however, with certain shortcomings, especially in terms of access, since “the state of the art of NLP is not always open source and available to experiment with, so you might have to replicate model architectures.” Nevertheless, Margaret explains that the greatest advantage of NLP tools is that “you can look at a large volume of texts and analyze how features of narrative, like causality, work across genres and textual communities.” In Margaret’s view, causality helps people approach a text, and NLP allows for a better understanding of that approach. She considers NLP a fundamental part of the digital humanities (DH).

DH is using computational tools to study cultural objects and phenomena. Any time researchers are using computational tools to gain a deeper understanding of a cultural product, that’s DH.

Reflecting on the important community behind NLP tools and research, Margaret advises her fellow DHers to reach out to the bountiful online community around NLP and other DH fields: “There’s always people willing to help.”